☕️ MoCHA-former: Moiré-Conditioned Hybrid Adaptive Transformer for Video Demoiréing

Neurocomputing 2026

Chung-Ang University · Creative Vision and Multimedia Lab (CMLab)

TL;DR: We present MoCHA-former, a video demoiréing transformer that decouples moiré from content and enforces spatio-temporal consistency, achieving state-of-the-art results on RAW and sRGB datasets.

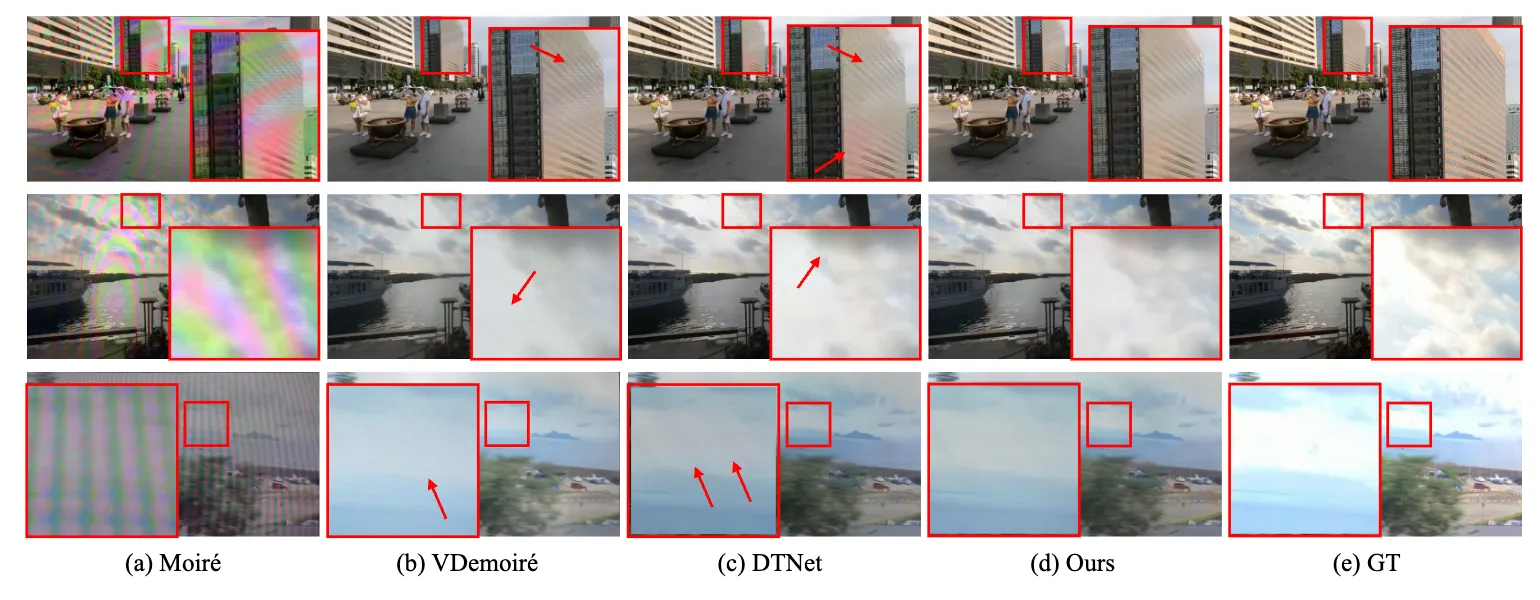

Comparison between the moiréd input video and the result of MoCHA-former.

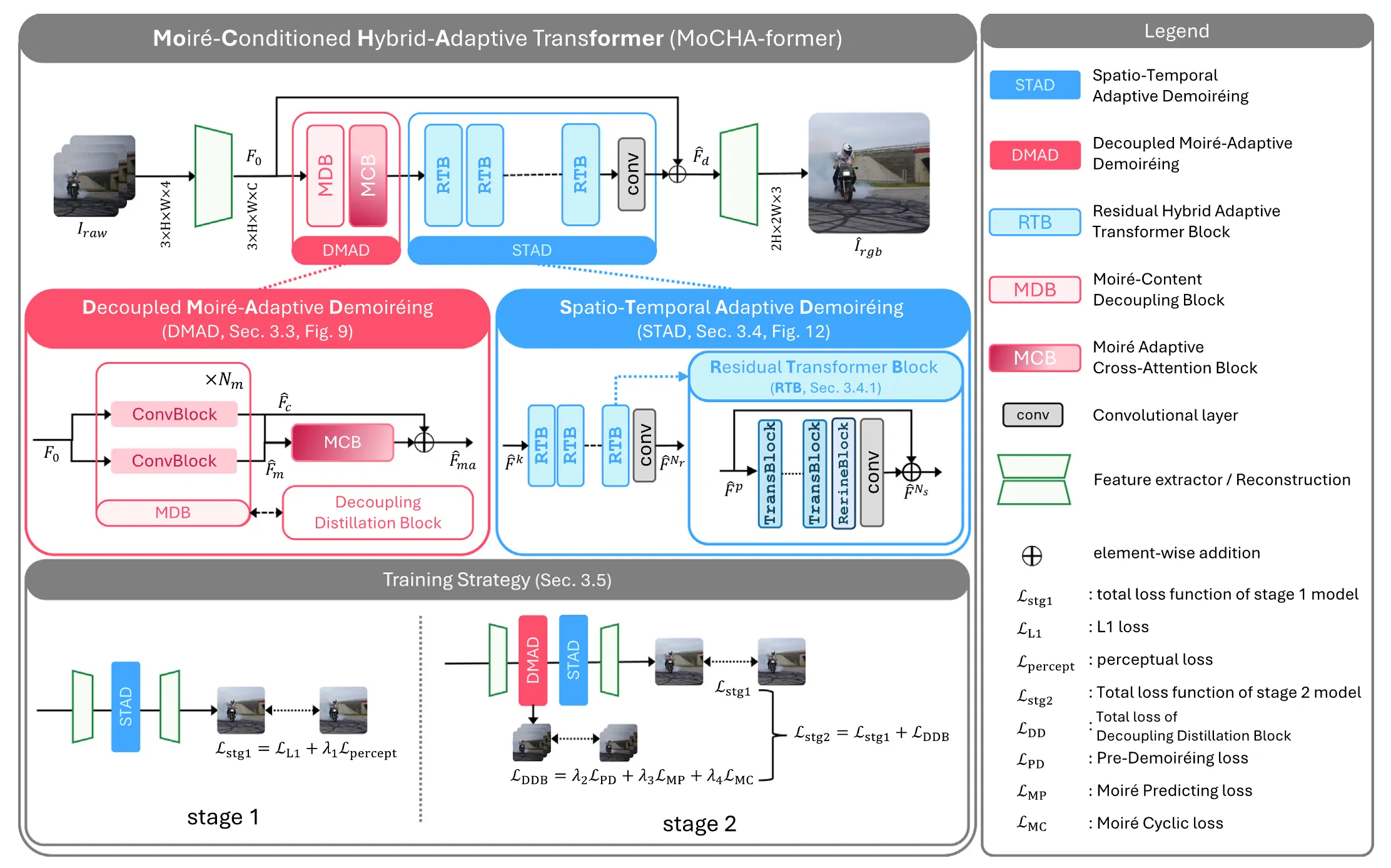

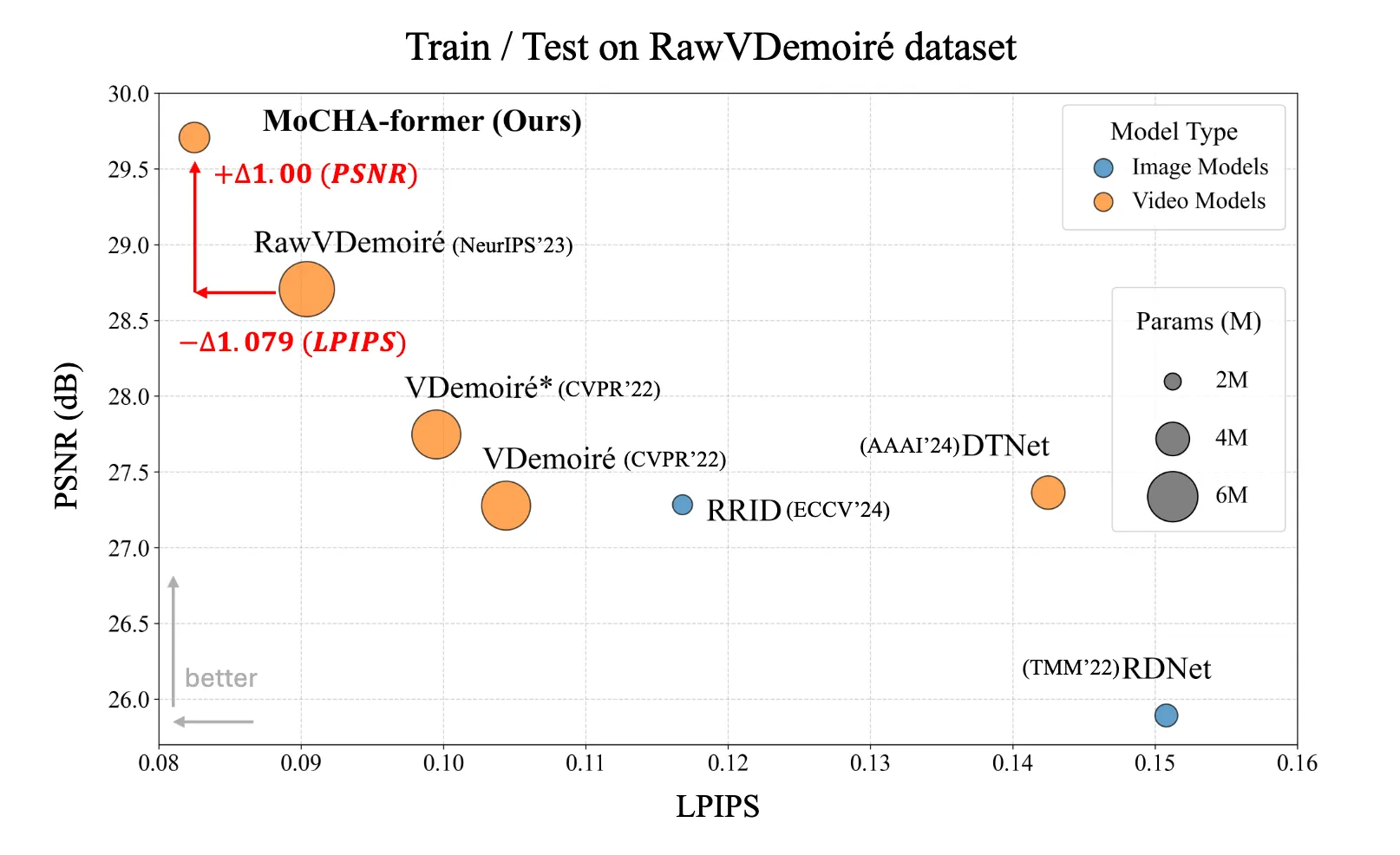

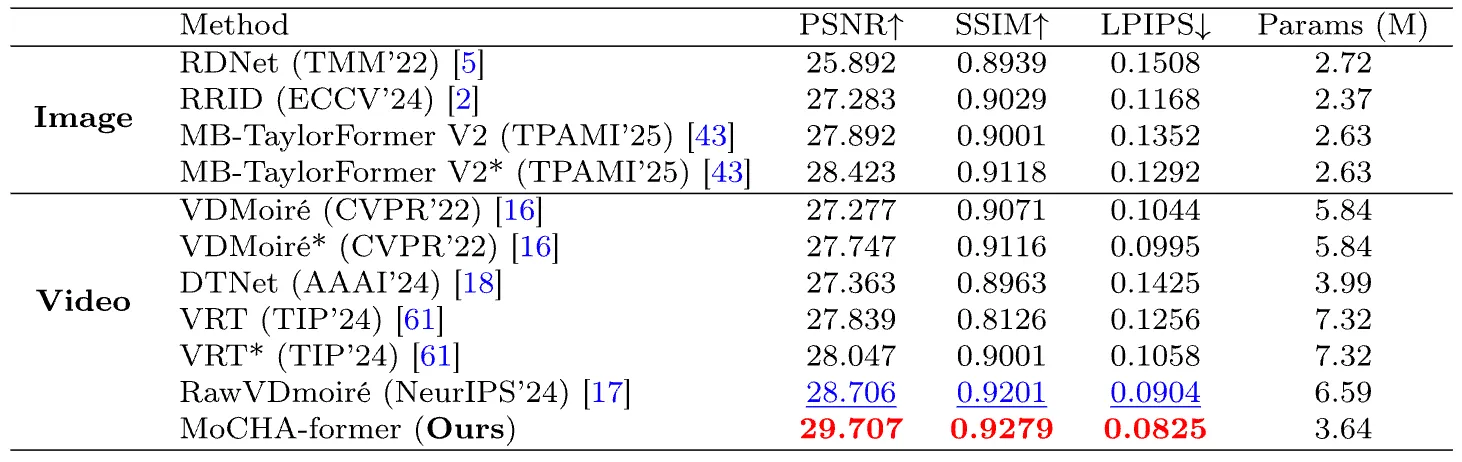

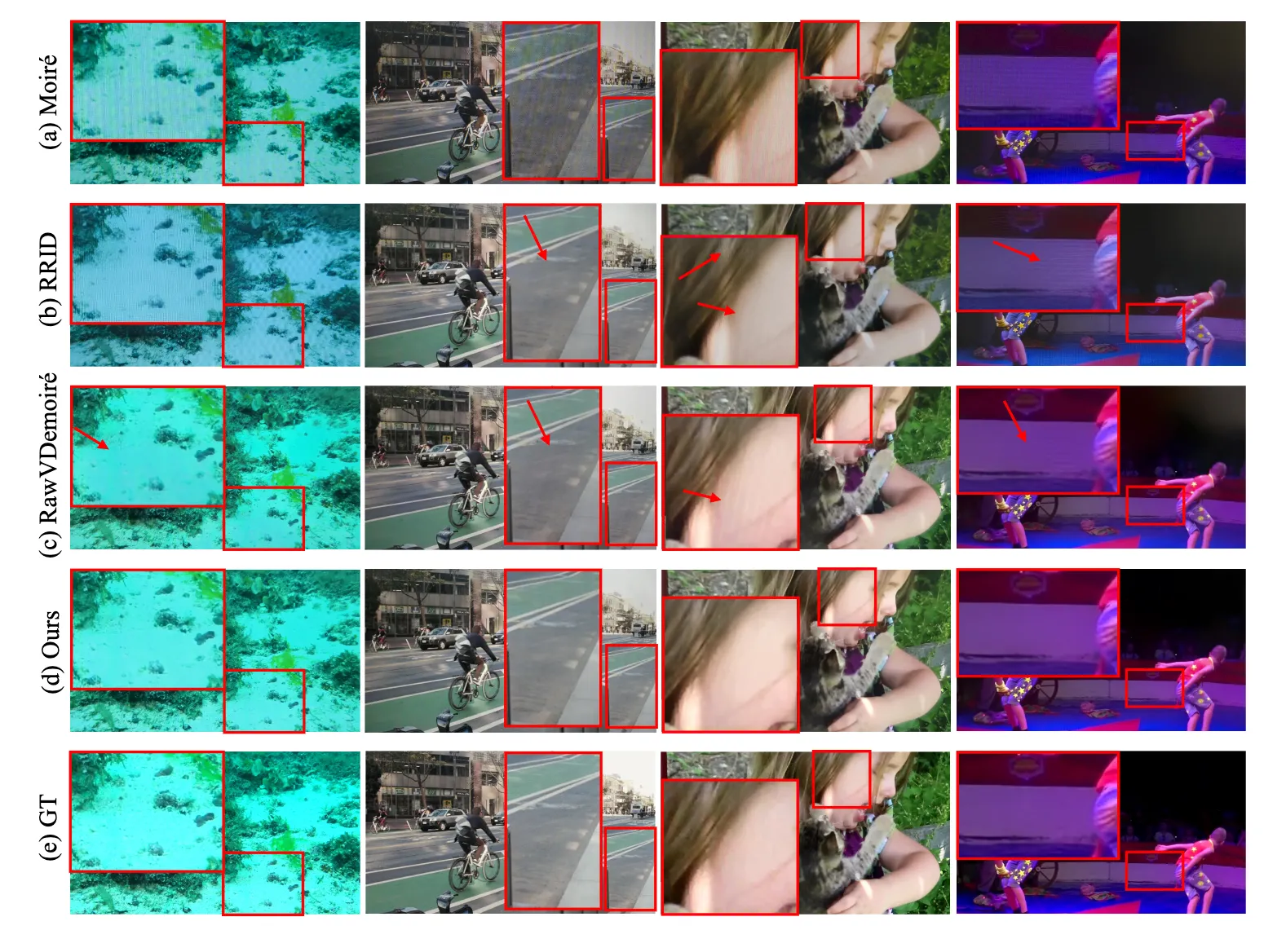

Recent advances in portable imaging have made camera-based screen capture ubiquitous. Unfortunately, frequency aliasing between the camera's color filter array (CFA) and the display's sub-pixels induces moiré patterns that severely degrade captured photos and videos. Although various demoiréing models have been proposed to remove such patterns, they still struggle with four characteristics of moiré: (i) spatially varying artifact strength within a frame, (ii) large-scale, globally spreading structures, (iii) channel-dependent statistics, and (iv) rapid temporal fluctuations across frames. We address these issues with the Moiré Conditioned Hybrid Adaptive Transformer (MoCHA-former), which comprises two key components: Decoupled Moiré Adaptive Demoiréing (DMAD) and Spatio-Temporal Adaptive Demoiréing (STAD). DMAD separates moiré from content using a Moiré Decoupling Block (MDB) and a Detail Decoupling Block (DDB), then produces moiré-adaptive features with a Moiré Conditioning Block (MCB) for targeted restoration. STAD introduces a Spatial Fusion Block (SFB) with window attention to capture large-scale structures, and a Feature Channel Attention (FCA) module to model channel dependence in RAW frames. To ensure temporal consistency, MoCHA-former performs implicit frame alignment without any explicit alignment module. We analyze moiré characteristics through qualitative and quantitative studies and evaluate on two video datasets covering the RAW and sRGB domains. MoCHA-former consistently surpasses prior methods in PSNR, SSIM, and LPIPS.
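The moiré mechanism described above is ordinary aliasing: a pattern finer than the sensor's Nyquist limit (0.5 cycles per pixel) folds into a much lower "beat" frequency. The 1-D NumPy sketch below illustrates only that mechanism. It is a simplification that ignores the 2-D CFA layout, lens blur, and colour channels, and the display frequency of 0.9 cycles per camera pixel is an assumed value, not a measurement from the paper. `dominant_frequency` and `predicted_alias` are hypothetical helpers written for this illustration.

```python
import numpy as np

def dominant_frequency(signal):
    """Frequency (cycles/sample) of the strongest non-DC bin of a real signal."""
    spectrum = np.abs(np.fft.rfft(signal))
    freqs = np.fft.rfftfreq(len(signal), d=1.0)
    spectrum[0] = 0.0  # ignore the mean (DC) component
    return freqs[np.argmax(spectrum)]

def predicted_alias(f):
    """Folded frequency of a pattern with f cycles/sample under unit-rate sampling."""
    return abs(f - round(f))

# Assumed 1-D setup: the display pattern repeats 0.9 times per camera pixel,
# above the Nyquist limit of 0.5 cycles/sample, so it cannot be represented.
n = np.arange(100)                 # one sample per camera pixel
display_freq = 0.9                 # hypothetical sub-pixel frequency
captured = np.cos(2 * np.pi * display_freq * n)

alias = dominant_frequency(captured)
print(alias, predicted_alias(display_freq))  # both close to 0.1
```

A frequency of 0.1 cycles per pixel is a stripe every 10 pixels, far coarser than either the display grid or the sensor grid. In 2-D, the same folding produces the stripe and ripple patterns typical of screen captures.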

MoCHA-former consists of two components: (i) DMAD and (ii) STAD. DMAD separates moiré patterns from content and generates moiré-adaptive features. STAD takes these moiré-adaptive features as input and removes moiré patterns in a spatio-temporal manner.
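This page describes STAD only at block level: an SFB built on window attention, followed by an FCA that models channel dependence. To give a rough picture of those two generic ingredients, the NumPy sketch below uses standard non-overlapping window self-attention (in the style of Swin Transformer) and a squeeze-and-excitation style channel gate. These are stand-ins and not the paper's SFB or FCA. The composition `stad_like_block`, the window size, the weight shapes, and the residual layout are all hypothetical, and the temporal (multi-frame) dimension is omitted.

```python
import numpy as np

def window_partition(x, ws):
    """Split an (H, W, C) map into non-overlapping ws x ws windows -> (nW, ws*ws, C)."""
    H, W, C = x.shape
    x = x.reshape(H // ws, ws, W // ws, ws, C).transpose(0, 2, 1, 3, 4)
    return x.reshape(-1, ws * ws, C)

def window_merge(win, ws, H, W):
    """Inverse of window_partition: (nW, ws*ws, C) -> (H, W, C)."""
    C = win.shape[-1]
    x = win.reshape(H // ws, W // ws, ws, ws, C).transpose(0, 2, 1, 3, 4)
    return x.reshape(H, W, C)

def softmax(a, axis=-1):
    a = a - a.max(axis=axis, keepdims=True)  # numerical stability
    e = np.exp(a)
    return e / e.sum(axis=axis, keepdims=True)

def window_attention(x, ws, wq, wk, wv):
    """Single-head self-attention computed independently inside each window."""
    H, W, C = x.shape
    win = window_partition(x, ws)                    # (nW, N, C), N = ws*ws
    q, k, v = win @ wq, win @ wk, win @ wv           # per-token linear projections
    scores = q @ k.transpose(0, 2, 1) / np.sqrt(C)   # (nW, N, N)
    return window_merge(softmax(scores) @ v, ws, H, W)

def channel_attention(x, w1, w2):
    """Squeeze-and-excitation style gate: rescale each channel by a value in (0, 1)."""
    squeeze = x.mean(axis=(0, 1))                    # (C,) global average per channel
    hidden = np.maximum(squeeze @ w1, 0.0)           # ReLU bottleneck
    gate = 1.0 / (1.0 + np.exp(-(hidden @ w2)))      # sigmoid, shape (C,)
    return x * gate                                  # broadcast over H and W

def stad_like_block(feat, ws, params):
    """Hypothetical composition: window attention with a residual, then channel gating."""
    feat = feat + window_attention(feat, ws, *params["attn"])
    return channel_attention(feat, *params["ca"])

rng = np.random.default_rng(0)
H, W, C, ws = 8, 8, 4, 4
params = {
    "attn": [rng.standard_normal((C, C)) * 0.1 for _ in range(3)],  # wq, wk, wv
    "ca": [rng.standard_normal((C, 2)), rng.standard_normal((2, C))],  # w1, w2
}
feat = rng.standard_normal((H, W, C))   # stand-in for moiré-adaptive features
out = stad_like_block(feat, ws, params)
print(out.shape)  # (8, 8, 4)
```

Restricting attention to windows makes the cost grow with the number of windows rather than quadratically with the number of pixels. Because each window only sees its own tokens, architectures that rely on window attention typically add shifted or larger windows, or other fusion steps, to reach image-wide structures.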

@article{sung2025mocha,
  title={MoCHA-former: Moir{\'e}-conditioned hybrid adaptive transformer for video demoir{\'e}ing},
  author={Sung, Jeahun and Roh, Changhyun and Eom, Chanho and Oh, Jihyong},
  journal={Neurocomputing},
  pages={132477},
  year={2025},
  publisher={Elsevier}
}